I’m not an “AI Engineer” by trade. I can’t speak to the technical aspects of neural networks, machine learning, or deep learning, and I wouldn’t claim specialist mastery of those topics. Yet as a software engineer, I find it nearly impossible now to build products without embedding large language models in the product stack. Whether that automatically makes the product agentic, I am not sure. That, however, isn’t what this blog post is about.

It took me a while to appreciate how the architecture of a product that leverages an LLM differs from that of a product that does not. My challenges weren’t really about frameworks or coding details, since any LLM can produce those. They were about the bigger conceptual picture: understanding what LLMs are, how they carry a conversation along, how to coax them into returning a limited set of options in the structure you want, and how to work around their quirks.

The more time I spent engaging with LLMs, even if I was treating them as a black box, the more a peculiar, persistent connection to Christopher Nolan’s Memento and its protagonist’s unique memory struggles began to take root in my mind.

The Memento Analogy

In Nolan’s Memento, Leonard Shelby suffers from a rare condition: he can’t form new memories after a traumatic head injury. While his long-term memory is intact, his short-term memory resets every few minutes. To survive, Leonard covers himself with tattoos and Polaroids, painstaking reminders of facts he can no longer hold in his mind. This storytelling device not only makes for a gripping narrative but, to me, also serves as a striking metaphor for large language models and how we, as AI builders, must work with them.

1. Frozen in Time

Leonard’s world is defined by everything he knew before his injury: his childhood, his job, his wife. Similarly, LLMs are trained on vast pools of text and data, but that knowledge is frozen as of the training cutoff date. Once trained, the model doesn’t know about anything that has happened since. Like Leonard, LLMs operate with an encyclopedic but time-locked memory. And yes, fine-tuning exists, but please, let’s not let some technical details get in the way of a good analogy.

2. Learning from Context

Each moment, Leonard must reconstruct his reality using notes, tattoos, and labeled photos. He never carries over context the way most of us do. The parallel is uncanny: LLMs begin every request stateless and know only the context we supply. Each prompt is an opportunity to feed in knowledge (facts, questions, or prior conversation), just as Leonard consults his notes before making decisions.
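To make the statelessness concrete, here is a minimal Python sketch. Everything in it is hypothetical: `fake_model` is a toy stand-in for an LLM call (not any real API), and `Conversation` is just one way an application might replay history. The point it tries to show is that the "memory" lives in the application, which resends the full history on every turn.

```python
Message = dict[str, str]  # assumed shape: {"role": ..., "content": ...}


def fake_model(messages: list[Message]) -> str:
    """Toy stand-in for an LLM: it can only use what is inside `messages`."""
    prefix = "My name is "
    for message in messages:
        if message["role"] == "user" and message["content"].startswith(prefix):
            name = message["content"][len(prefix):].rstrip(".")
            return f"Your name is {name}."
    return "I don't know your name."


class Conversation:
    """Hypothetical wrapper that keeps the notes Leonard cannot keep himself."""

    def __init__(self, model):
        self.model = model
        self.history: list[Message] = []

    def ask(self, text: str) -> str:
        self.history.append({"role": "user", "content": text})
        # The whole history is sent every time; the model keeps nothing.
        reply = self.model(self.history)
        self.history.append({"role": "assistant", "content": reply})
        return reply


# Without history, the model has nothing to go on.
print(fake_model([{"role": "user", "content": "What is my name?"}]))

# With the history replayed, the earlier fact is available again.
chat = Conversation(fake_model)
chat.ask("My name is Leonard.")
print(chat.ask("What is my name?"))
```

The same question gets two different answers, and the only thing that changed is what the application chose to send along with it.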

3. Externalizing Memory

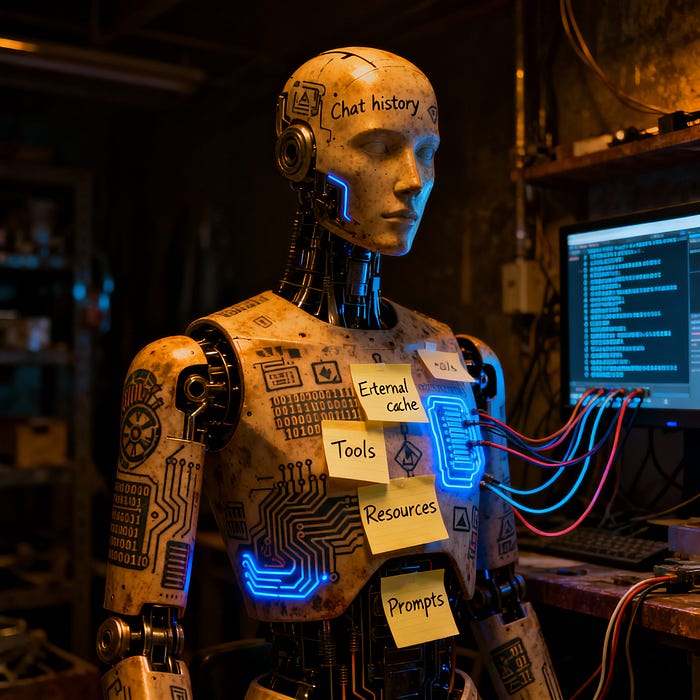

Leonard externalizes essential knowledge, tattooing key clues on his body and taping facts to walls. Without these aids, he would have no continuity. LLM-powered applications must perform a similar trick: everything new or critical must be stored outside the model, then carefully reintroduced each time. Whether it’s chat history, databases, or caches, we’re essentially tattooing our systems so the model can “remember.”
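As a rough illustration of "tattooing the system", the sketch below stores facts in a JSON file and reintroduces them into every prompt. The `NoteStore` class, the file layout, and the prompt wording are all assumptions made up for this example; a real product would more likely use a database or a cache, as mentioned above.

```python
import json
from pathlib import Path


class NoteStore:
    """Hypothetical external memory: facts live on disk, not in the model."""

    def __init__(self, path: str):
        self.path = Path(path)

    def load(self) -> list[str]:
        if not self.path.exists():
            return []
        return json.loads(self.path.read_text(encoding="utf-8"))

    def add(self, fact: str) -> None:
        notes = self.load()
        if fact not in notes:  # avoid writing the same note twice
            notes.append(fact)
        self.path.write_text(json.dumps(notes), encoding="utf-8")


def build_prompt(store: NoteStore, question: str) -> str:
    """Reintroduce every stored fact ahead of the new question."""
    lines = ["Known facts:"]
    lines += [f"- {note}" for note in store.load()]
    lines += ["", f"Question: {question}"]
    return "\n".join(lines)
```

Because the notes survive on disk, a brand-new process (or a brand-new request) can rebuild the same prompt, which is exactly the continuity the model itself cannot provide.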

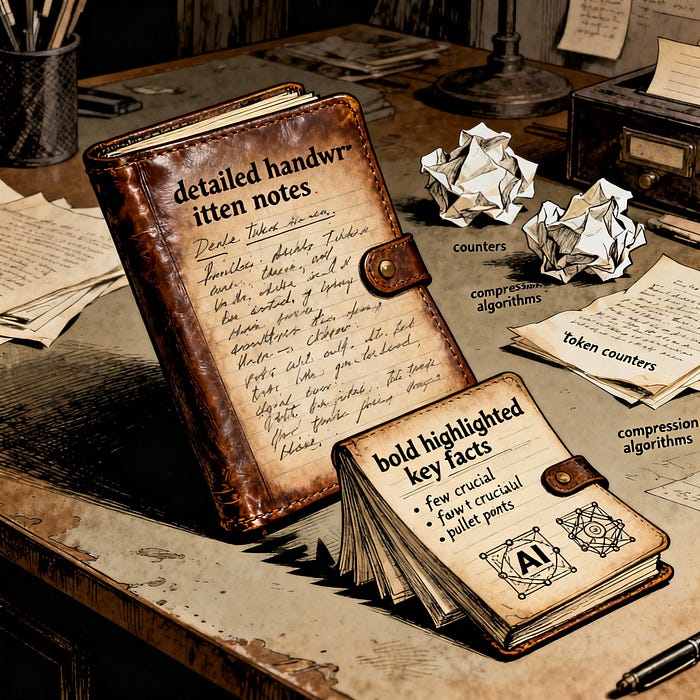

4. The Limits of Storage

But Leonard runs out of skin to tattoo; eventually, he must prioritize what’s essential. Likewise, AI systems hit hard limits: context windows are finite, and we must summarize or compress information if a session grows too long. There’s an art and a discipline to knowing which details to keep and which to let go of. Keep too little, and the LLM produces less-than-optimal content; keep too much, and the context overflows. Context windows are measured in tokens, and tokens are money.
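One simple way to stay inside a context budget is to keep the system note and then as many of the most recent messages as fit. The sketch below assumes a deliberately crude token estimate (one token per whitespace-separated word); real tokenizers count differently, and the function names are hypothetical. Summarizing older messages instead of dropping them is another option that is not shown here.

```python
def estimate_tokens(text: str) -> int:
    """Crude assumption: one token per whitespace-separated word."""
    return len(text.split())


def fit_to_budget(system: str, history: list[str], budget: int) -> list[str]:
    """Keep the system note plus the newest messages that fit in `budget`."""
    remaining = budget - estimate_tokens(system)
    kept: list[str] = []
    for message in reversed(history):  # walk from newest to oldest
        cost = estimate_tokens(message)
        if cost > remaining:
            break  # everything older than this is dropped
        kept.append(message)
        remaining -= cost
    return [system] + list(reversed(kept))  # restore chronological order


history = ["one two three four", "five six", "seven eight nine"]
print(fit_to_budget("You are helpful", history, budget=8))
# ['You are helpful', 'five six', 'seven eight nine']
```

With a budget of 8, the system note takes 3 tokens, the two newest messages take the remaining 5, and the oldest message is the one that gets let go.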

Why The Analogy Matters

This comparison isn’t just a neat storytelling device. It captures a practical truth: building LLM applications requires constant vigilance about what the model can “know” at any given time. We, as designers and engineers, must play the role of Leonard’s past self: planning, curating, and structuring memory so it survives each back-and-forth of a conversation. The work is not about building a mind that learns continuously, but about building one that must be carefully reminded, orchestrated, and sometimes even protected from itself.

Just as Memento forces its protagonist to relive the present, LLMs live perpetually in the now. They are extremely helpful, but only within the boundaries of the context and reminders they are given. Understanding this limitation sharpens an engineer’s sense of what needs to be built. It also shapes product architecture, UX, and user expectations.

Leonard’s Tools

Every term, framework, and standard you hear in the context of agentic AI is, in a sense, a tool that Leonard has already used. Retrieval-Augmented Generation (RAG) is just his Polaroids, pulled out at the right moment. Model Context Protocol, vector databases, session managers, caches, embeddings, orchestration frameworks: all of these are tattoos on the body of our systems. They externalize memory so that the LLM, fed this information in a controlled manner relevant to the question asked, can reconstruct meaning in real time.
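To show what "pulling out the right Polaroid" might look like, here is a toy retrieval sketch in Python. It is not how a production RAG pipeline works: word counts stand in for real embeddings, a plain list stands in for a vector database, and every function name is hypothetical. The idea it illustrates is the same, though: score the stored notes against the question and put only the best matches into the prompt.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Toy stand-in for an embedding: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, notes: list[str], k: int = 2) -> list[str]:
    """Return the k notes most similar to the question."""
    query = vectorize(question)
    ranked = sorted(notes, key=lambda n: cosine(query, vectorize(n)), reverse=True)
    return ranked[:k]


def build_rag_prompt(question: str, notes: list[str], k: int = 2) -> str:
    """Place only the retrieved notes in front of the question."""
    context = "\n".join(f"- {note}" for note in retrieve(question, notes, k))
    return f"Use only these notes:\n{context}\n\nQuestion: {question}"
```

Given notes about a refund policy, office hours, and shipping times, asking "What is the refund policy?" would rank the refund note first, and the shipping note would never reach the model when `k` is small.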

Our job as engineers is to build the scaffolding around the model: the reminders, the selective notes, the carefully timed prompts. The real craft lies in delivering the right context, in the right size, at the right time.

The more we refine these external memory systems, the closer we come to turning Leonard’s fragmented existence into something coherent.